PRO Updates and Exports

Full Database Schema Transfer from Server to Server via Database Backup (available in MIT)

All operations are ultimately performed by pg_dump and psql. If you create a backup on one server and restore it on another, make sure the PostgreSQL version on the target server is the same or newer (check it with psql -V). Restoring onto an older version will most likely fail. This does not apply to partial table transfers using Totum's internal tools.
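Because the dump is produced on the source server and replayed on the target, a quick pre-flight check is to compare PostgreSQL major versions before restoring. A minimal sketch, assuming the usual psql -V output format ("psql (PostgreSQL) 15.4") and PostgreSQL 10+ numbering (for 9.x the major version has two parts, which this simple check ignores). The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: refuse a restore onto an older PostgreSQL major version.
# Assumes `psql -V` prints e.g. "psql (PostgreSQL) 15.4" and 10+ numbering.

# Extract the major version ("15") from a `psql -V` output string.
pg_major() {
  local ver
  ver=$(printf '%s\n' "$1" | awk '{print $3}')   # third field, e.g. "15.4"
  printf '%s\n' "${ver%%.*}"                      # keep the part before the first dot
}

# Exit status 0 only if the target major version is the same or newer.
# $1 = `psql -V` output on the source server, $2 = on the target server.
restore_version_ok() {
  local src tgt
  src=$(pg_major "$1")
  tgt=$(pg_major "$2")
  [ "$tgt" -ge "$src" ]
}
```

For example, on the target server: restore_version_ok "psql (PostgreSQL) 14.9" "$(psql -V)", where the first string was copied from the source server. A non-zero exit status means the target is older.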

Creating a Dump

Replace schema_name with your schema name (default is totum):

bin/totum schema-backup --schema="schema_name" prod_dump.sql

You can exclude logs and compress the dump with gzip:

bin/totum schema-backup --schema="schema_name" --no-logs --gz prod_dump.sql

You can exclude the contents of specific tables (comma-separated list):

bin/totum schema-backup --schema="schema_name" --no-content="table_name_1,table_name_2" prod_dump.sql
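For regular backups it helps to put the date into the dump name. A minimal sketch of a wrapper that uses only the flags shown above (--schema, --no-logs, --gz); backup_schema and the DRY_RUN variable are hypothetical, not part of bin/totum, and whether --gz changes the final file extension is not covered here.

```shell
#!/usr/bin/env bash
# Sketch: dated, log-free, gzip-compressed backup of one schema.
# backup_schema is a hypothetical helper; run it from the Totum root folder.
# Set DRY_RUN=1 to print the command instead of running it.
backup_schema() {
  local schema="$1"
  local out
  out="${schema}_$(date +%Y-%m-%d).sql"   # dated name passed to schema-backup
  local -a cmd=(bin/totum schema-backup --schema="$schema" --no-logs --gz "$out")
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}
```

Usage: DRY_RUN=1 backup_schema totum prints the command; backup_schema totum runs it.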

Restoring a Dump

The larger the database dump, the longer the loading process will take. Interrupting the operation in any way will render the solution inoperable.

Ensure that no one is working with the target database during the loading process!

Replace SCHEMA_NAME_FOR_REPLACE with the target schema name:

bin/totum schema-replace prod_dump.sql SCHEMA_NAME_FOR_REPLACE

Executing this operation will completely replace the database schema with the schema from prod_dump.sql.

To create a new schema, replace SCHEMA_NAME with the new schema name and HOST_FOR_SCHEMA with the host connected to this schema, specified without the protocol (for example, example.com rather than https://example.com):

bin/totum schema-replace prod_dump.sql SCHEMA_NAME HOST_FOR_SCHEMA

By default, cron jobs are disabled when the dump is loaded. To keep them active, add --with-active-crons:

bin/totum schema-replace --with-active-crons prod_dump.sql SCHEMA_NAME_FOR_REPLACE
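Since an interrupted load leaves the solution inoperable, it can be worth wrapping schema-replace in a few checks. A minimal sketch, assuming bash; restore_schema and DRY_RUN are hypothetical, and the wrapper only adds a file check and an explicit confirmation around the command shown above.

```shell
#!/usr/bin/env bash
# Sketch: guarded wrapper around `bin/totum schema-replace`.
# restore_schema is a hypothetical helper; run it from the Totum root folder.
# Set DRY_RUN=1 to print the command instead of running it.
restore_schema() {
  local dump="$1" schema="$2" answer
  # Refuse to start if the dump file is missing or empty.
  if [ ! -s "$dump" ]; then
    echo "dump file '$dump' is missing or empty" >&2
    return 1
  fi
  local -a cmd=(bin/totum schema-replace "$dump" "$schema")
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "${cmd[*]}"
    return 0
  fi
  # The target schema is replaced completely, so ask before starting.
  read -r -p "Replace schema '$schema' with '$dump'? Type yes: " answer
  [ "$answer" = "yes" ] || return 1
  "${cmd[@]}"
}
```

Running it inside a terminal multiplexer such as screen or tmux also protects the load from a dropped SSH session.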

Partial Solution Transfers (PRO only)

Totum-PRO has a mechanism that allows exporting and importing sets of tables with or without data.

This way, you can transfer parts of a solution from one schema to another.

This same mechanism is used for updating core tables.

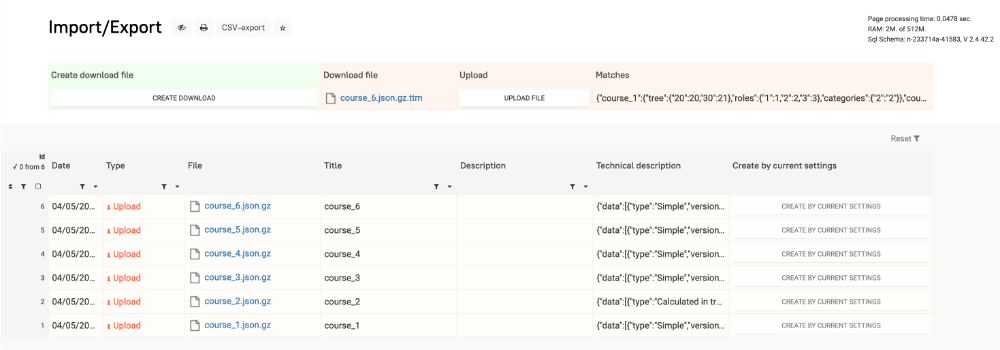

The main table for creating and loading is located in System Tables — PRO — Import/Export.

Tables are exported and imported by name. Therefore, if two databases contain different tables with the same name, the transfer will not work correctly: the existing table with the matching name will be overwritten during import. If you are creating an export for mass distribution, give your tables a prefix of 3-4 letters followed by two underscores when designing them. For new system tables, we use ttm__.
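The naming convention above can be checked automatically, for example in a review script. A minimal sketch, assuming table names use lowercase Latin letters, digits, and underscores; has_distribution_prefix is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: does a table name start with 3-4 lowercase letters plus "__"?
# Assumption: names use only [a-z0-9_]; adjust the pattern if yours differ.
has_distribution_prefix() {
  local re='^[a-z]{3,4}__[a-z0-9_]+$'
  [[ $1 =~ $re ]]
}
```

For example, has_distribution_prefix crm__deals succeeds, while deals or abcde__deals fail.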

Reserved Names

Totum has a number of system tables whose names cannot be used for user tables:

auth_log

calc_fields_log

calcstable_cycle_version

calcstable_versions

crons

log_structure

notification_codes

notifications

panels_view_settings

print_templates

roles

settings

table_categories

tables

tables_fields

tree

users

and tables with the prefix ttm__
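The reserved list above can be turned into a simple check before creating or importing a table. A minimal sketch; is_reserved_table_name is a hypothetical helper, and the list is copied from this page, so it may need updating for newer Totum versions.

```shell
#!/usr/bin/env bash
# Sketch: succeed if a table name is reserved by Totum (list from this page)
# or uses the ttm__ prefix.
is_reserved_table_name() {
  local name="$1" r
  local -a reserved=(auth_log calc_fields_log calcstable_cycle_version
    calcstable_versions crons log_structure notification_codes notifications
    panels_view_settings print_templates roles settings table_categories
    tables tables_fields tree users)
  [[ $name == ttm__* ]] && return 0
  for r in "${reserved[@]}"; do
    [ "$name" = "$r" ] && return 0
  done
  return 1
}
```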

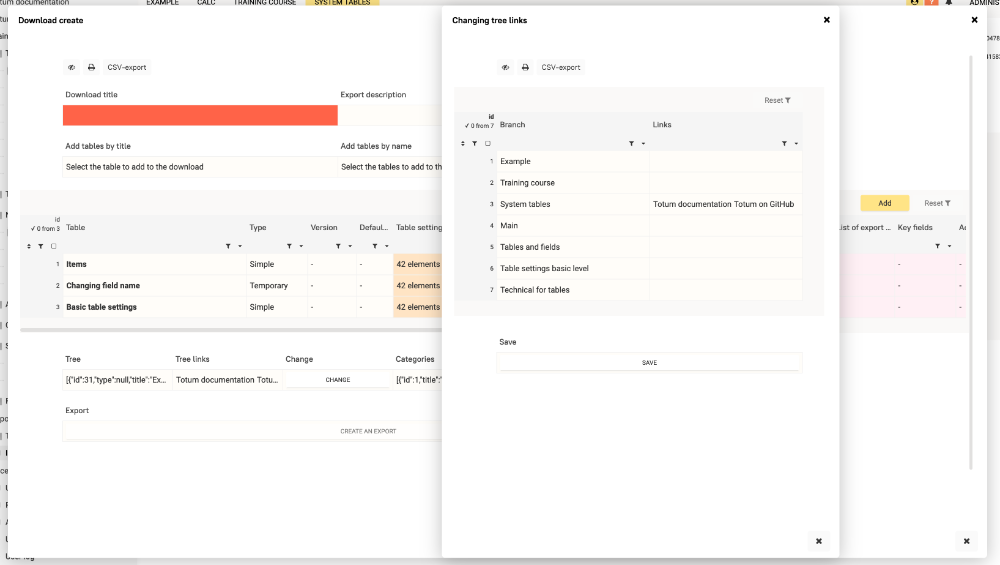

Creating an Export

To create an export, click CREATE EXPORT and select the tables to include, which of their settings and fields will be exported, and the data export settings.

Also, provide an Export Name and, if necessary, a Description.

Based on the selected tables, elements of the Tree, Roles, and Table Categories will be added to the export.

When you click CREATE EXPORT, a row is added to the Import/Export table containing the export file: gzip-compressed JSON with the additional extension .ttm.

This file can be downloaded and uploaded to another schema.
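Because the export is gzip-compressed JSON, standard tools can inspect a downloaded file before it is uploaded anywhere. A minimal sketch; the JSON structure inside the file is not described here, so the check only confirms that the file decompresses and starts like JSON. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: sanity-check and read a downloaded .ttm export with gzip.

# Succeeds if the file is an intact gzip stream whose text starts with { or [.
ttm_looks_valid() {
  local first
  # Full decompression to /dev/null fails on corrupt or non-gzip input.
  gzip -dc -- "$1" >/dev/null 2>&1 || return 1
  # First non-blank character of the decompressed text.
  first=$(gzip -dc -- "$1" 2>/dev/null |
    awk '{ sub(/^[ \t\r]+/, "") } length($0) > 0 { print substr($0, 1, 1); exit }')
  [ "$first" = "{" ] || [ "$first" = "[" ]
}

# Print the decompressed JSON, e.g. to pipe into a pager or JSON viewer.
ttm_cat() {
  gzip -dc -- "$1"
}
```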

Uploading via Interface

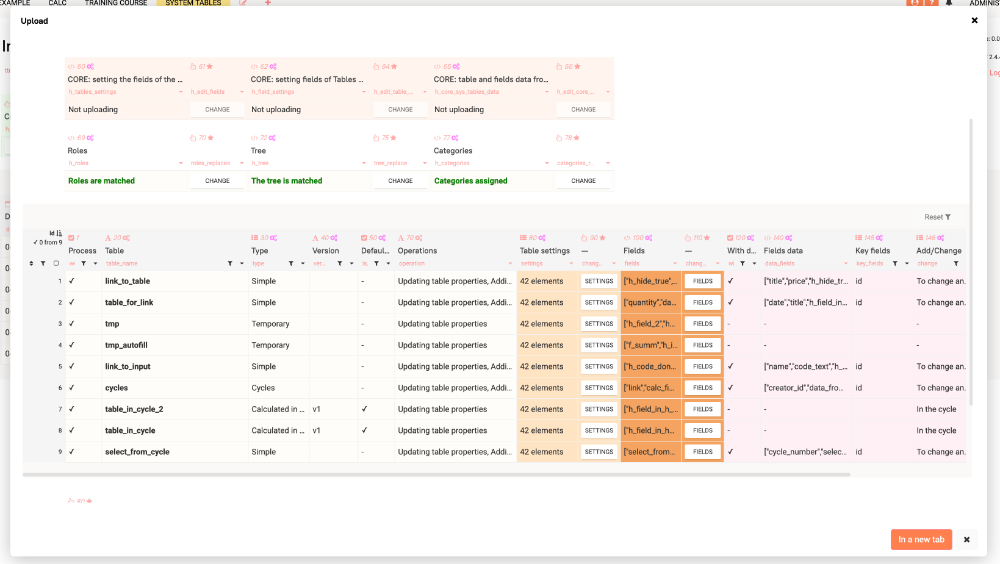

To import, select the export file in the Upload File field and click UPLOAD FILE. The schema is not modified at this point: the file is unpacked and the upload settings window opens.

Be cautious when uploading a file from an unverified source: it may contain changes to base tables or malicious code. Do not proceed past this step if you cannot analyze what this code does or are unsure of the provider!
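One way to confirm that a file really comes from the expected provider is to compare its SHA-256 checksum with one published through a separate channel. A minimal sketch, assuming GNU coreutils sha256sum; verify_sha256 is a hypothetical helper. A matching checksum only shows the file was not altered; it says nothing about what the code inside does.

```shell
#!/usr/bin/env bash
# Sketch: compare a file's SHA-256 with a checksum published by the provider.
# Assumes GNU coreutils sha256sum; the comparison is case-insensitive.
verify_sha256() {
  local file="$1" expected actual
  expected=$(printf '%s' "$2" | tr 'A-F' 'a-f')
  actual=$(sha256sum -- "$file" | awk '{print $1}')
  [ "$actual" = "$expected" ]
}
```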

Type of Action Performed

During an upload, a table can be added, its fields can be updated, and its data can be updated.

If the name of the uploaded table matches the name of a table in the schema, the table settings and field settings will be updated.

You can control which settings and fields will be updated.

Fields can only be deleted through executable code, which you can review in the Code field of each table.

Data Mapping Settings within Tables

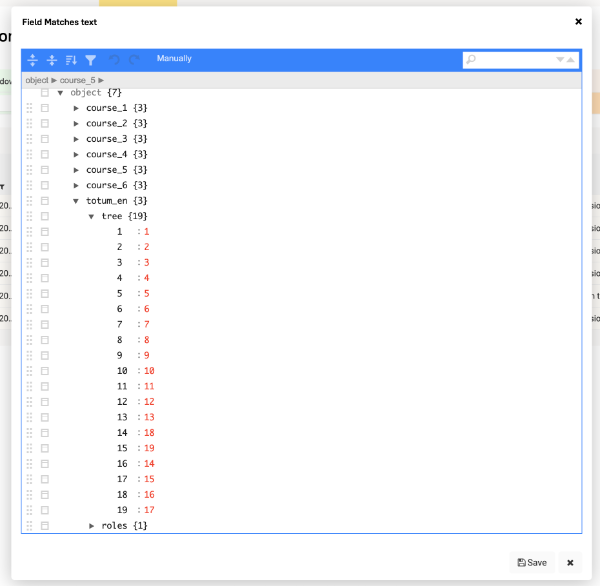

When uploading data, the action type and the key fields matter.

Totum uses the key fields to match the rows already in the table with the rows being uploaded.

The action type determines what happens: add and update, add only, or update only.

Key fields must be included in the list of fields from which data is exported.
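The interaction of the action type and the key field can be modelled as a small decision table. This is a conceptual sketch, not Totum's code: it assumes "add only" leaves existing rows untouched and "update only" ignores rows whose key is not yet in the table. The labels add_update, add_only, update_only and the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch (conceptual model, not Totum's implementation).
# $1 = action type: add_update | add_only | update_only (hypothetical labels)
# $2 = 1 if a row with the same key value already exists, else 0
row_action() {
  case "$1:$2" in
    add_update:1 | update_only:1) echo update ;;
    add_update:0 | add_only:0)    echo insert ;;
    add_only:1 | update_only:0)   echo skip ;;
    *) echo "unknown action type: $1" >&2; return 1 ;;
  esac
}

# Print the action for each incoming key value.
# $1 = action type, $2 = comma-separated existing key values, rest = incoming keys
plan_upload() {
  local type="$1" existing=",$2," key exists
  shift 2
  for key in "$@"; do
    case "$existing" in *",$key,"*) exists=1 ;; *) exists=0 ;; esac
    printf '%s: %s\n' "$key" "$(row_action "$type" "$exists")"
  done
}
```

For example, plan_upload add_update "A1,A2" A2 A3 prints "A2: update" and "A3: insert".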

Role, Tree, and Table Category Mapping

When uploading, you need to map the Roles, Tree elements, and Table Categories in the uploaded file to those in the schema you are uploading into.

For example, the Manager role may have different ids in the exporting schema and in the target schema (the same applies to tree folders and table categories).

For each element, specify its counterpart or indicate that a new entry should be created.

During the upload, these mappings are recorded in the matches field of the Import/Export table for subsequent uploads.

Matches are looked up by the name of the uploaded file.

Matches for Subsequent Uploads

The matches field of the Import/Export table defines automatic mappings of roles, tree, and categories for uploaded schemas.

The totum_** array is technical and necessary for the correct installation of updates.

The field contains an array in which:

keys are id numbers in the uploaded schema;

values are id numbers in the current schema.

If a match is not found, the uploaded element is created, and matches is automatically updated.
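The lookup described above can be modelled with an associative array: keys are ids from the uploaded schema, values are ids in the current schema, and an unmatched id gets a newly created element whose mapping is then recorded. A conceptual sketch only, requiring bash 4+; matches, next_id, resolve_id and resolved_id are hypothetical names, and the starting id is illustrative.

```shell
#!/usr/bin/env bash
# Sketch (conceptual model, not Totum's implementation). Requires bash 4+.
declare -A matches=()   # uploaded-schema id -> current-schema id
next_id=100             # hypothetical next free id in the current schema

# Set resolved_id to the current-schema id for an uploaded id, creating
# a new element and recording the mapping if no match exists yet.
resolve_id() {
  local uploaded="$1"
  if [[ -z "${matches[$uploaded]+set}" ]]; then
    matches[$uploaded]=$next_id
    next_id=$((next_id + 1))
  fi
  resolved_id=${matches[$uploaded]}
}
```

Usage: after matches=([3]=12), resolve_id 3 sets resolved_id to 12, while resolve_id 8 creates a new entry (100) and records 8 -> 100.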

Codes on Load

The export may contain codes that will be executed upon loading.

The codes are executed after loading the settings, fields, and table data.

The following variables are available in the code:

$#insertedIds: ids of the added rows, if data was added.

$#changedIds: ids of the changed rows, if data was changed.

$type: install if the table is added to an empty DB schema; update if the table is added to an already existing DB schema.

$#is_table_created: true if the table is added to the schema for the first time.

Codes on load are executed without any access restrictions!

Using bin/totum for Schema Updates

To update the schema, you can use the bin/totum console utility.

Upload the export file (*.gz.ttm) to the server and run the following from the Totum root folder.

To update all schemas:

bin/totum schemas-update MATCHES_NAME PATH_TO_FILE

To update a single schema:

bin/totum schema-update MATCHES_NAME PATH_TO_FILE --schema="SCHEMA_NAME"

MATCHES_NAME: if this is the first load into the schema and no match is found in Matches, all Tree, Roles, and Categories elements are processed as new (except the Creator role), and a match record with the specified name is created in Matches. If the Match existing (f_match_existings) field was checked when the export was created, one-to-one matches are created on load.
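A small pre-flight wrapper can catch a wrong path or file type before the update commands above touch any schema. A minimal sketch, assuming the export keeps its *.gz.ttm name; update_schemas and DRY_RUN are hypothetical, and MATCHES_NAME and the file path are passed through unchanged.

```shell
#!/usr/bin/env bash
# Sketch: checks before `schemas-update` / `schema-update`.
# update_schemas is a hypothetical helper; run it from the Totum root folder.
# $1 = MATCHES_NAME, $2 = path to the export, $3 = optional single schema.
# Set DRY_RUN=1 to print the command instead of running it.
update_schemas() {
  local matches_name="$1" file="$2" schema="${3:-}"
  case "$file" in
    *.gz.ttm) ;;
    *) echo "expected a *.gz.ttm export file: $file" >&2; return 1 ;;
  esac
  [ -s "$file" ] || { echo "file not found or empty: $file" >&2; return 1; }
  local -a cmd
  if [ -n "$schema" ]; then
    cmd=(bin/totum schema-update "$matches_name" "$file" --schema="$schema")
  else
    cmd=(bin/totum schemas-update "$matches_name" "$file")
  fi
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}
```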

Using Updates to Create a Test — Prod Link

If you plan a replicable solution, or the project cannot be modified on the production server:

1. Create PROD and develop the solution on it. If you developed on TEST, start from step 3.

2. Create TEST.

TEST/PROD located on different servers:

2.1 Create a dump of PROD using the console command:

bin/totum schema-backup --schema="schema_name" prod_dump.sql

2.2 Deploy a clean TEST on a separate server/folder and database, and load the created dump:

bin/totum schema-replace prod_dump.sql totum

TEST/PROD located on the same server in different schemas:

2.1 Execute:

bin/totum schema-duplicate NAME_PROD_SCHEMA NAME_NEW_TEST_SCHEMA URL_FOR_TEST

3. Create a master export from TEST. This export will be the main one, and its file name will be used in matches in PROD.

- When creating this export, check the Match existing (f_match_existings) field. If it is checked, tree elements, roles, and table categories will be matched one-to-one automatically when the export is loaded into PROD.


For updates:

5.1 Develop on TEST.

5.2 Update the master file using Create from current settings.

5.3 Load the file into the schema or schemas through the interface or bin/totum. If TEST and PROD are on the same server in different schemas and you are loading through bin/totum, exclude TEST from the update:

bin/totum schemas-update MATCHES_NAME PATH_TO_FILE --exclude="schema1,schema2" --exclude="schema3"

5.4 If you developed on TEST and are transferring the project to PROD, create a master export from TEST, create a clean PROD, and load the master file into it. Matches will be created based on the file name.
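When several schemas must be skipped, the --exclude value can be assembled from a list instead of typed by hand. A minimal sketch that joins its arguments into the comma-separated form shown in step 5.3; exclude_opt is a hypothetical helper, not part of bin/totum.

```shell
#!/usr/bin/env bash
# Sketch: build --exclude="a,b,c" from a list of schema names.
# exclude_opt is a hypothetical helper; "$*" joins with the first IFS char.
exclude_opt() {
  local IFS=,
  printf -- '--exclude=%s\n' "$*"
}
```

Usage: bin/totum schemas-update MATCHES_NAME PATH_TO_FILE "$(exclude_opt test_schema)", where test_schema is your TEST schema name.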

! When transferring data encrypted with strEncrypt to another server, copy the contents of the Crypto.key file from the installation root (/home/totum/totum-mit/Crypto.key) to the new server, because a new key is generated randomly when a new server is created.
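After the original key has been copied to the new server (for example with scp), it can be installed while keeping the randomly generated key as a backup. A minimal sketch; install_crypto_key is a hypothetical helper, and the default path is the one from the note above, which may differ on your installation. Compare the printed fingerprint with the output of sha256sum on the original server.

```shell
#!/usr/bin/env bash
# Sketch: install a copied Crypto.key, back up the generated one,
# and print the SHA-256 of the installed key for comparison.
# $1 = copied key file, $2 = destination (default path from this page).
install_crypto_key() {
  local src="$1" dest="${2:-/home/totum/totum-mit/Crypto.key}"
  # Keep the auto-generated key in case anything was already encrypted with it.
  if [ -e "$dest" ]; then
    cp -p -- "$dest" "$dest.generated.bak" || return 1
  fi
  cp -p -- "$src" "$dest" || return 1
  sha256sum -- "$dest" | awk '{print $1}'
}
```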